An overview of the history of AI live captions and when to use English automatic captioning

The history of AI captions is a long one, and it goes back to the early days of computers. In the 1960s, researchers at Bell Labs began working on an experimental system that could convert speech into text.

Around 1970, Terry Winograd at MIT created a program called SHRDLU that could understand simple English sentences about a simulated world of blocks and reply in natural language.

A team of researchers from Carnegie Mellon University developed the first AI captioning system in the 1990s. It was based on a combination of neural networks and natural language processing. The system could identify words in an image, but it could not connect those words to create a sentence or paragraph.

The breakthrough came in 2014, when a team of computer scientists at Stanford University was trying to develop a machine learning algorithm that could help robots understand language.

How does AI captioning work?

In a nutshell, AI captioning is speech-to-text: machine learning algorithms identify spoken words and automatically generate live captions from them. These algorithms are trained on thousands of hours of data before they're released for public use, and they're constantly improving as more people use them.

One part of this process is automatic speech recognition (ASR). ASR converts the incoming audio into a machine-readable format, in this case text. The AI is also trained to distinguish background noise, such as a cheering crowd, from actual speech.

To generate a caption that matches the audio in both meaning and tone, context is necessary. Without context, the AI could not tell apart words that sound the same, such as “to” and “two”.
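To make the role of context concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular captioning product works: the tiny `CORPUS`, the `bigram_counts` helper, and the `pick_homophone` function are all made up for this example. It simply counts which word pairs appear in some sample sentences and uses those counts to choose between “to” and “two”.

```python
from collections import Counter

# Hypothetical mini-corpus standing in for the large text collections
# that real systems learn from.
CORPUS = [
    "i want to go home",
    "we need to talk",
    "she has two cats",
    "they bought two tickets",
    "i have two questions",
    "he likes to read",
]


def bigram_counts(sentences):
    """Count how often each pair of neighbouring words occurs."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        counts.update(zip(words, words[1:]))
    return counts


BIGRAMS = bigram_counts(CORPUS)


def pick_homophone(previous_word, next_word, candidates=("to", "two")):
    """Pick the candidate that fits best between its neighbouring words."""
    def score(candidate):
        return BIGRAMS[(previous_word, candidate)] + BIGRAMS[(candidate, next_word)]

    # On a tie, max() keeps the first candidate in the tuple.
    return max(candidates, key=score)


print(pick_homophone("want", "go"))         # context suggests "to"
print(pick_homophone("bought", "tickets"))  # context suggests "two"
```

Production systems rely on far larger language models, but the idea is the same: the surrounding words decide which spelling makes sense.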

In addition, you can make AI captions even more accurate by providing the AI system with what is called an AI vocabulary. Creating a glossary of proper names, acronyms, and technical terms that are likely to be used in a live session or event helps the AI recognize and accurately transcribe those words.
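As a rough illustration of why a glossary helps, the sketch below corrects near-miss spellings in a finished transcript using Python's standard `difflib` module. This is a simplified stand-in: real captioning engines typically use the glossary while recognizing speech rather than fixing text afterwards. The `GLOSSARY` contents, the `apply_glossary` function, and the `cutoff` value are assumptions chosen for this example.

```python
import difflib

# Hypothetical glossary of names a recognizer might misspell.
GLOSSARY = ["Interprenet", "Interprefy"]


def apply_glossary(transcript, glossary=GLOSSARY, cutoff=0.8):
    """Replace words that closely resemble a glossary term with that term."""
    lookup = {term.lower(): term for term in glossary}
    corrected = []
    for word in transcript.split():
        # get_close_matches returns the most similar glossary entries
        # whose similarity ratio is at least `cutoff`.
        match = difflib.get_close_matches(
            word.lower(), list(lookup), n=1, cutoff=cutoff
        )
        corrected.append(lookup[match[0]] if match else word)
    return " ".join(corrected)


print(apply_glossary("welcome to the interprefi platform"))
```

The sketch splits on spaces only, so words with attached punctuation are compared as-is. A lower `cutoff` catches more misspellings but also raises the risk of replacing ordinary words, which is one reason a short, event-specific glossary tends to work better than a long generic one.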

At Interprenet, we often work with glossaries and feed the AI glossary terms before a client’s live event or work meeting using the Interprefy platform, a robust language accessibility application.

When to use AI captions?

AI live captions are used for a wide range of applications, including:

- Conference Calls: Users can use AI live captioning while participating in video conferences on Zoom, MS Teams, or other common video conferencing platforms. At Interprenet, we can also provide AI captions outside of these native applications.

- Events or Conferences: Event attendees can follow along with presentations or keynotes using AI live captions.

- Academia and Schools: Students with accessibility needs can use AI live captioning to study lectures and online classes without having to ask for help from others.

- Corporate Training or Webinars: AI live captions help participants gain an even better understanding of the content that's delivered in real time.

- Town Halls: Governments and municipalities that stream town halls or board meetings for their citizens use AI live captions to make these events more accessible.

At Interprenet, our clients come from a variety of industries and have different reasons for wanting to incorporate AI captioning into their live meetings or events. For most clients, however, it’s about accessibility, efficiency, and accuracy of understanding.

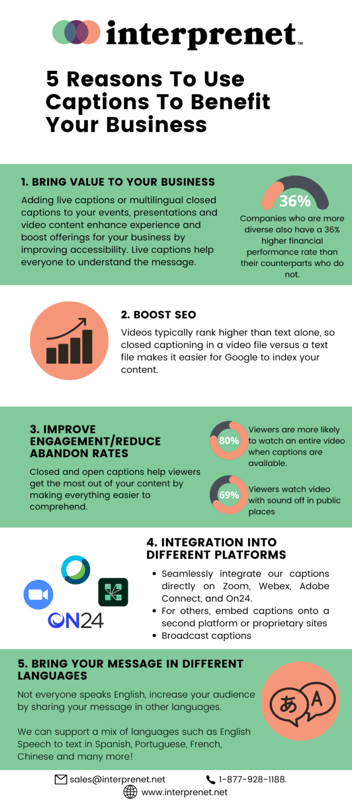

What are the benefits of AI captioning?

AI live captioning makes your content instantly accessible as it is delivered to screens in real time. At Interprenet, we use industry-leading captioning technology that is scalable and compatible with leading meeting platforms. Our clients can trust that their content will be seen by those who benefit from live captioning on any web-enabled device.

- Accessibility: According to the World Health Organization, over 5% of the world’s population experience hearing loss – that’s about 430 million people. Adding live captions helps make your content available to everyone.

- Audience engagement: Captions are ubiquitous in our daily lives, especially on audio or video streams on social media or podcasts. It’s beneficial to include live captions in business settings to keep engagement high and meet audience expectations.

- Increased comprehension: Captions provide clarity to viewers when people’s names or technical terms are mentioned. They also help viewers follow unfamiliar accents or fast speakers.

- Benefits for international audiences: In a globalized business world, viewers who know English as a second language benefit from live captions as they can follow along with the speech.